Overview

If you work with AI models, you've likely encountered this frustrating scenario: you set a model's temperature parameter to zero, expecting a perfectly deterministic and repeatable output, only to run the exact same inference again and receive a slightly different result. It's a common experience that leaves many researchers and developers scratching their heads, wondering what setting they missed or if there's a bug in the high-level code.

The reality is that this isn't a simple software bug. The source of this variability is much deeper and more fundamental, rooted in the way computers themselves handle mathematics. This isn't about random seeds or faulty logic in a Python script; it's about a core characteristic of computer hardware that has profound implications for the entire field of machine learning.

This talk explores the surprising and counter-intuitive concept of "floating-point non-associativity." We'll break down what it is, why it exists, and how this low-level hardware quirk has a massive impact on the reproducibility, performance, and even the accuracy of the AI models we rely on every day.

1. The Core Problem: Computer Math Isn't Always Associative

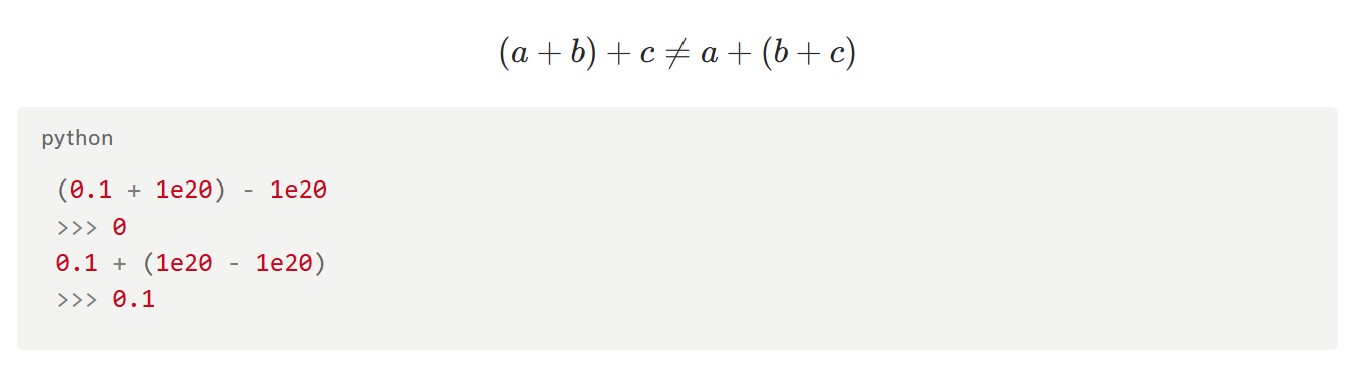

In simple terms, floating-point non-associativity means that when adding a series of numbers with

decimal points, the order of operations can change the final answer. For example, consider the

calculation (1,000,000,000 + -1,000,000,000) + 0.1, which correctly results in 0.1.

However, if you change the order to (1,000,000,000 + 0.1) + -1,000,000,000, the

computer might produce 0. This happens because computers only store a fixed number of digits. When

adding 0.1 to one billion, the computer recognizes the tiny number is insignificant to the total and

effectively "erases" it before the final addition takes place.

Visual demonstration of how operation order affects floating-point arithmetic results

This behavior is fundamentally different from the math we learn with whole numbers (integers), where the order of addition never matters. This distinction is critical for machine learning because AI models perform a massive number of floating-point additions.

To maximize speed, modern GPUs are designed to run these additions in parallel and in a non-deterministic order. The exact sequence of calculations can change from one run to the next, meaning the same overall computation can yield slightly different results each time.

2. The Surprising Source of AI's "Randomness"

This non-associativity is a major, often overlooked, cause of non-determinism in AI models. Even when

high-level settings like temperature=0 are used to eliminate randomness in token

selection, this underlying hardware behavior persists. The non-deterministic order of parallel

additions happening deep within the GPU is enough to cause small variations that cascade into

different final outputs.

This creates significant challenges for researchers who need to conduct controlled experiments or debug model behavior. Even when they meticulously create testing environments designed to eliminate randomness, this hardware-level issue undermines their efforts. This makes reproducibility a constant struggle for the research community.

"It's very difficult for researchers to actually try to create these reproducible environments..."

3. The Hidden Trade-Off: Perfect Reproducibility Is Incredibly Slow

This issue creates a fundamental trade-off between performance (speed) and determinism (reproducibility). GPUs achieve their incredible speed by executing thousands of operations in parallel and allowing the order of additions to change based on which calculation finishes first. This out-of-order execution is a core part of their design philosophy.

It is possible to force a GPU to perform additions in a fixed, deterministic sequence using an operation known as an "atomic add." However, this forces a parallel processor to work sequentially, creating a computational bottleneck that is fundamentally at odds with its architecture. The result is significant performance degradations.

Research examining this effect found that a deterministic implementation on a powerful H100 GPU experienced an almost 2x slowdown compared to the standard, non-deterministic version. This highlights the steep price of guaranteed reproducibility: in the world of large-scale AI, cutting your computational speed in half is a massive cost.

4. The Scale of a "Tiny" Error Is Shockingly Large

While the individual rounding errors are minuscule, their cumulative effect over the trillions of calculations in a model's training process can be enormous. One of the most surprising findings from research on this topic is that running non-deterministic training and inference can cause a massive divergence in the final model weights.

Up to 20% of the model weights will be different

This is a shocking figure. It means that two models, trained on the exact same data with the exact same settings, can end up with one-fifth of their internal parameters being completely different. Yet, research also highlights a fascinating paradox: despite this huge variability in their internal structure, the models "converge to similar loss values." This suggests that, at a theoretical level, the variability doesn't have a massive effect on the model's functional accuracy.

This strange reality suggests that neural networks possess a remarkable degree of redundancy, where countless different internal configurations can lead to the same functional outcome. The models still "work," but they are fundamentally unique and non-reproducible, making tasks like debugging and controlled experimentation incredibly challenging.

5. You Can't Fix This With a "Random Seed"

This hardware-level variability is different from other sources of randomness in machine learning. Many stochastic processes in AI, such as weight initialization or token sampling, can be controlled by setting a specific "random seed." This ensures that anyone using the same seed will get the exact same sequence of "random" numbers, making the process repeatable.

However, you cannot "seed" the physical execution order of parallel additions on a GPU. This source of non-determinism is a product of the hardware's architecture and runtime conditions, which are outside of a user's direct control. This makes it a much more stubborn problem to solve.

"The resulting thousand models are completely non-reproducible even for a single user on a single machine."

Hardware Comparisons: NVIDIA H100 vs. Groq LPU

The session analyzes how GPU execution and hardware design influence accuracy, speed, and reproducibility. Specifically, it compares the NVIDIA H100 GPU with the Groq LPU (Language Processing Unit), highlighting the trade-offs between deterministic performance and computational efficiency.

Experiments on PyTorch kernels and hardware-level comparisons demonstrate that purpose-built hardware can flip this trade-off. The Groq LPU, designed with deterministic implementations in mind, shows that deterministic operations can actually be faster than a non-deterministic GPU's. In fact, Groq doesn't even offer a non-deterministic mode, making reproducibility a core architectural feature, not a costly add-on.

Conclusion: Building a More Predictable Future

The frustrating "randomness" you see in AI outputs, even with deterministic settings, is often not a bug but a feature of the hardware it runs on. A fundamental quirk in how computers perform floating-point arithmetic, combined with the parallel processing that makes modern AI possible, is a major driver of non-reproducibility. This creates a difficult trade-off between computational speed and scientific rigor.

Fortunately, awareness of this issue is leading to new solutions. The industry is seeing the emergence of purpose-built hardware that prioritizes determinism. This raises a crucial question for the future of the field: As AI becomes more critical in science, medicine, and engineering, how many other "invisible" hardware limitations will we need to overcome, and could purpose-built, deterministic hardware be the key to truly reliable artificial intelligence?